Michael Steiner

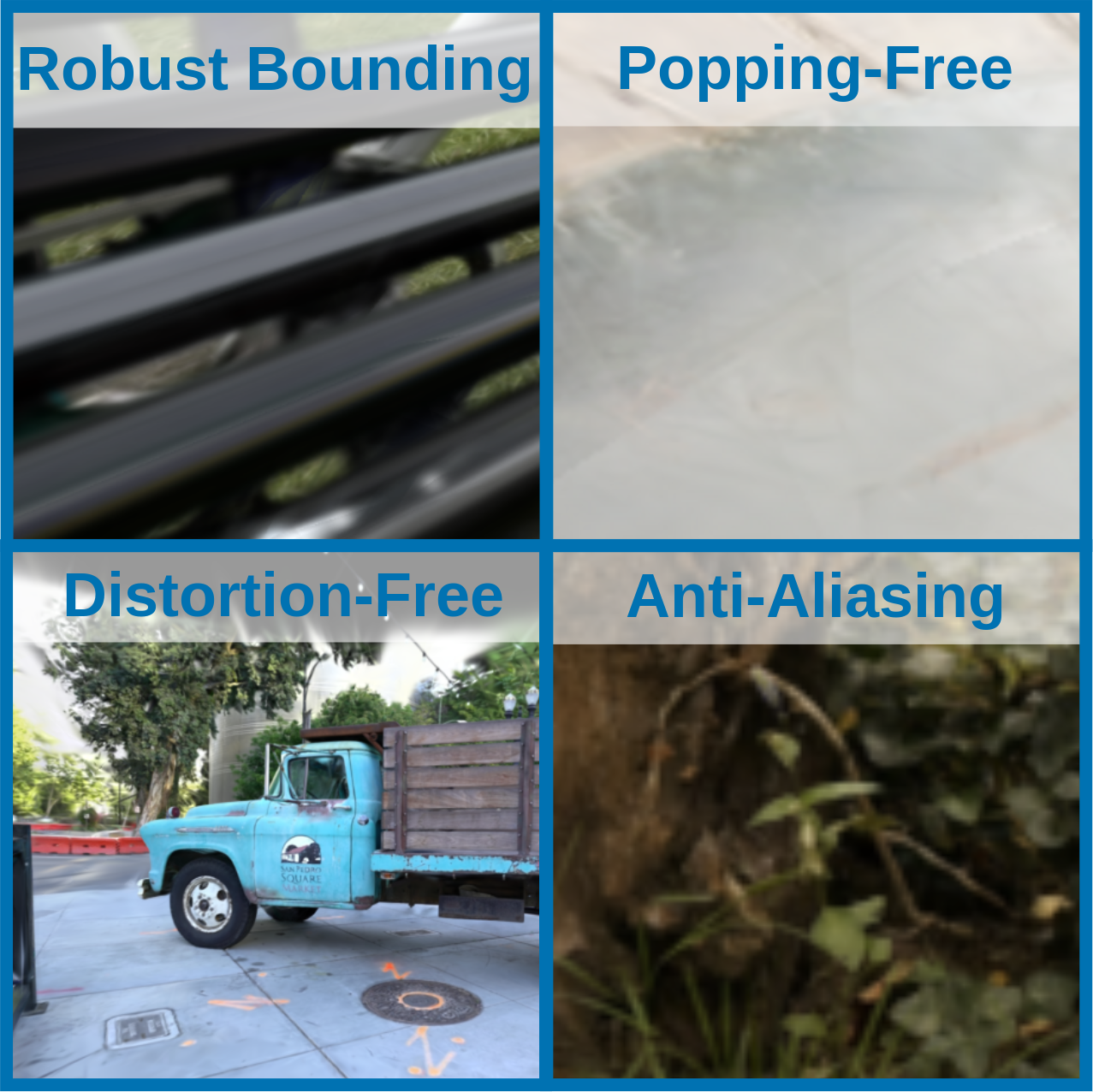

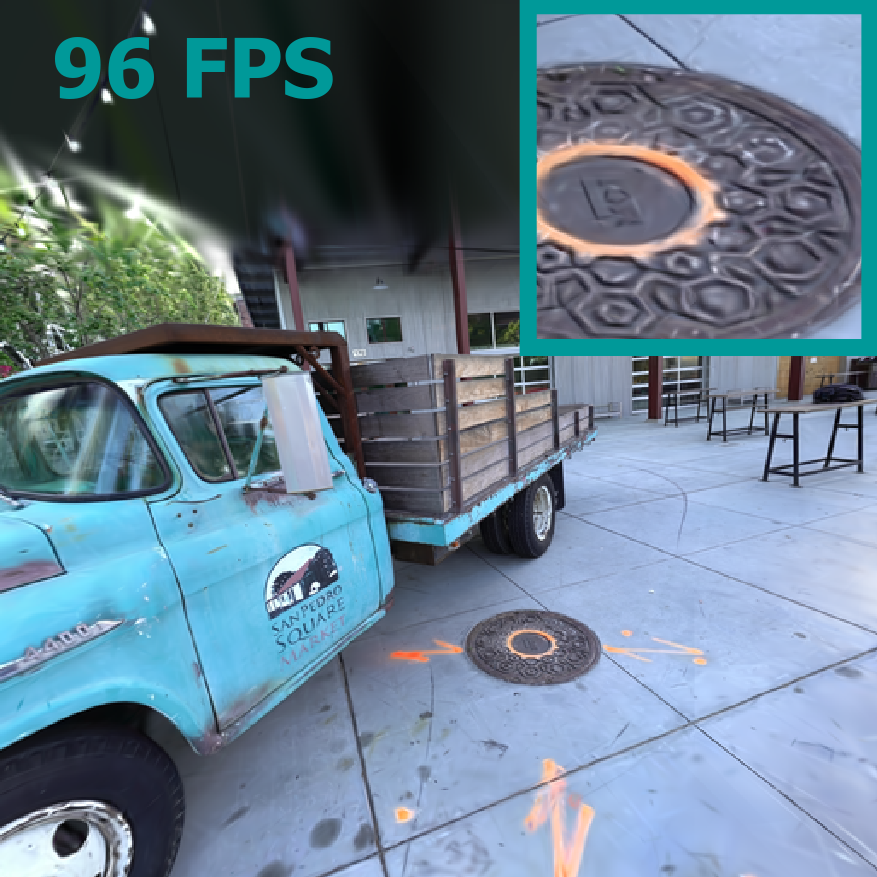

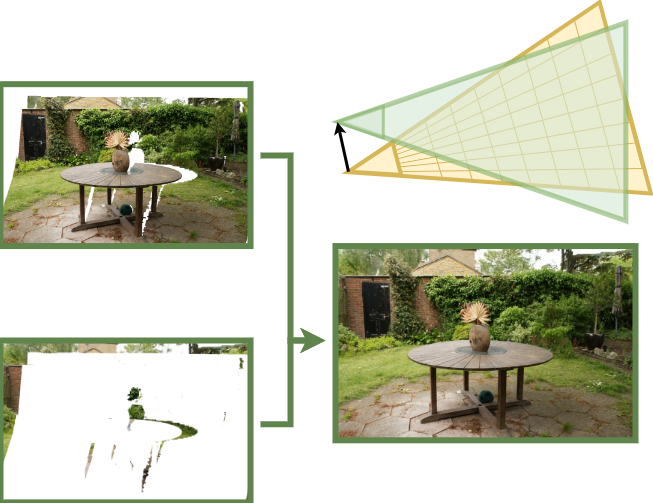

I am currently a PhD student at the Institute of Visual Computing (IVC) at Graz University of Technology, supervised by Markus Steinberger. Most of my recent work has focused on novel view synthesis and neural rendering. My broader research interests lie in Visual Computing, Machine Learning, and Parallel Computing in general.

In 2023, I received my Master's degree in Computer Science (with distinction) from Graz University of Technology, with a major in Visual Computing and a minor in Machine Learning.